Drill在AI场景下的应用

写在前面:上周偷了懒没有更新博客,说好的坚持写作啪啪打脸,发现和懒惰做斗争真是一项艰巨又光荣的任务。

上一篇文章算一篇科普文章,讲述了SQL on Hadoop已经成为趋势,甚至SQL on MQ也开始出现。本片文章主要讲述SQL on Hadoop工具Drill在AI场景下的应用。

我为什么用Drill?

其实最开始在做技术调研的时候,我们并非使用Drill,而是现在比较流行的Hive。但随着在使用的过程中,一些问题渐渐显露出来,变得无法满足现在的需求。主要的问题出现在一下两点:

- 当数据的字段有变化时,需要不断调整表的schema,当这种变化变的频繁时,需要付出额外的精力去做schema的管理,比如如何兼容不同格式的数据。

- hive底层使用的Map Reduce去做计算,由于MR的低效,导致在数据量稍大的表上执行复杂的查询时耗时很久。

为了解决以上两点,我做了大量调研,最终采用Drill来作为我们组的OLAP工具,经过了半年的踩坑,已在部门内被算法科学家,产品运营和研发大规模使用。

先介绍下Drill吧。

官网对Drill的介绍很简洁:

Apache Drill: Schema-free SQL Query Engine for Hadoop, NoSQL and Cloud Storage

其中的关键信息时Schema-free,接下来介绍了三个特性:

Agility: Get faster insights without the overhead (data loading, schema creation and maintenance, transformations, etc.)

Flexibility: Analyze the multi-structured and nested data in non-relational datastores directly without transforming or restricting the data

Familiarity: Leverage your existing SQL skillsets and BI tools including Tableau, Qlikview, MicroStrat

即

1.敏捷: 基本不需要成本即可上手使用

2.灵活:支持分析非关系型数据库中的多种结构和嵌套的数据,不需要对数据进行转换和约束。

3.熟悉:能够和当先流行的SQL技能和BI工具结合。

另外Drill使用了MPP架构,本质上比Hive的效率要好很多。

好了让我一步步从需求开始来介绍如何使用drill的。

首先行为日志是按json格式存储, 具体格式如下:

1 | {"logType":"request","actionTime":1533979550236,"pubIp":"0.0.0.0","clientToken":"b8298c76d204483d9501a583f047ea71","ip":"0.0.0.0","sceneName":"default","businessId":"80","businessToken":"b8298c76d204483d9501a583f047ea71","serviceName":"action","userId":"8f51a10793e4eeba9bab44082abe6737e1f6","contextExist":"0","logWriteTime":1533979551521,"itemId":"1_0_12679538_1554_1533777240","requestID":"WHZVfsUu","sceneId":"635","action":"show","itemSetId":"360","logWriteTimeHuman":"2018-08-11 17:25:51"} |

安装&运行Drill

分为两种模式:

这里主要介绍分布式模式(假设有三台机器分别是m1,m2, m3)

环境准备:

(必须)运行的Oracle或者OpenJDK 8

(必须)运行的Zookeeper

(必须)运行的Hadoop集群

(推荐)使用DNS

安装:

1.下载Apache Drill的最新版本在这里

2.解压 tar -xzvf apache-drill-{version}.tar.gz

3.drill-override.conf配置文件中配置Cluster ID和zookeeper地址并拷贝到所有机器上

启动:

执行bin/drillbit.sh start

配置

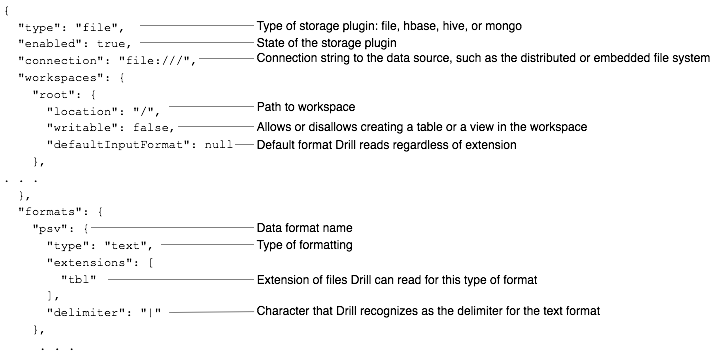

浏览器输入m1:8047,选择Storge,点击create创建新的存储插件, 输入如下配置

1 | { |

配置说明:

使用:

1.浏览器输入m1:8047访问

2.drill shell访问,终端执行./bin/sqlline -u jdbc:drill:zk=m1:2181,m2:2181,m3:2182

3.接口访问

1 | curl -X "POST" "http://m1:8047/query.json" \ |

踩坑记:

1.报错信息

1 | Expected vector class of org.apache.drill.exec.vector.NullableIntVector but was holding vector class org.apache.drill.exec.vector.NullableVarCharVector |

当要查询大量的小文件时会报上诉错误,当前版本仍然存在这个bug,希望新版本可以修复,可以利用如下方式可以极大降低问题出现的概率

修改配置

1 | ALTER system SET `exec.enable_union_type` = true; |

尽量避免查询大量碎片文件

2.如果日志文件中存在一些格式错误的日志,可以利用如下配置跳过错误日志

1 | ALTER system SET `store.json.reader.skip_invalid_records` = true; |